Announcing Nio

Nio is an experimental async runtime for Rust.

This project began as an experiment to explore alternative scheduling strategies.

Tokio uses a work-stealing scheduler. It is a very complex scheduler and requires a lot of bookkeeping. Unfortunately, replacing Tokio's scheduler isn't simple.

Nio is designed with a modular architecture that enables seamless switching between different scheduling algorithms.
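One way to get this kind of pluggability in Rust is to put the task-placement decision behind a trait and make the runtime generic over it. The sketch below is hypothetical: the `Scheduler` trait, `RoundRobin`, and `Runtime` are illustrative names, not Nio's actual API, and tasks are reduced to plain closures held in per-worker queues with no real threads.

```rust
use std::cell::Cell;
use std::collections::VecDeque;

/// In this simplified model a task is just a boxed closure.
type Task = Box<dyn FnOnce()>;

/// Hypothetical pluggable policy: given the current length of each
/// worker's queue, return the index of the worker that gets the next task.
trait Scheduler {
    fn pick(&self, queue_lens: &[usize]) -> usize;
}

/// One example policy: hand tasks out in a fixed rotation.
struct RoundRobin {
    next: Cell<usize>,
}

impl Scheduler for RoundRobin {
    fn pick(&self, queue_lens: &[usize]) -> usize {
        let i = self.next.get() % queue_lens.len();
        self.next.set(i + 1);
        i
    }
}

/// A toy "runtime" generic over its scheduler: switching policies
/// only means changing the type parameter `S`.
struct Runtime<S: Scheduler> {
    scheduler: S,
    queues: Vec<VecDeque<Task>>,
}

impl<S: Scheduler> Runtime<S> {
    fn new(scheduler: S, workers: usize) -> Self {
        let queues = (0..workers).map(|_| VecDeque::new()).collect();
        Runtime { scheduler, queues }
    }

    /// Ask the scheduler where the task goes, enqueue it there,
    /// and return the chosen worker index.
    fn spawn(&mut self, task: Task) -> usize {
        let lens: Vec<usize> = self.queues.iter().map(|q| q.len()).collect();
        let worker = self.scheduler.pick(&lens);
        self.queues[worker].push_back(task);
        worker
    }

    /// Drain every queue in order and run each task on the current thread.
    fn run_all(&mut self) {
        for q in &mut self.queues {
            while let Some(task) = q.pop_front() {
                task();
            }
        }
    }
}

fn main() {
    let mut rt = Runtime::new(RoundRobin { next: Cell::new(0) }, 3);
    let placed: Vec<usize> = (0..5)
        .map(|i| rt.spawn(Box::new(move || println!("task {i}"))))
        .collect();
    println!("{:?}", placed); // [0, 1, 2, 0, 1]
    rt.run_all();
}
```

Because the policy is a type parameter, a different strategy can be dropped in by implementing `Scheduler` for another struct, without touching `Runtime` itself.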

Finding an Alternative Scheduler

In most thread-based schedulers, each worker thread is associated with its own task queue.

A straightforward approach to assigning tasks would be to distribute them evenly across the task queues. However, this approach doesn't work well with a multi-threaded scheduler. Some worker threads might become overloaded while others remain underutilized, leading to imbalanced workloads. Another drawback is that if a single worker can handle all the tasks efficiently, distributing them across multiple threads adds unnecessary overhead.
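To make the imbalance concrete, here is a small deterministic simulation. It assumes each task has a known cost (an illustrative stand-in for how long the task actually runs) and deals tasks out evenly in round-robin order. Every worker receives the same number of tasks, yet the total work per worker can differ a lot.

```rust
/// Deal tasks to `workers` queues in round-robin order and return the
/// total cost that ends up on each worker.
fn round_robin_load(costs: &[u32], workers: usize) -> Vec<u32> {
    let mut load = vec![0; workers];
    for (i, &cost) in costs.iter().enumerate() {
        load[i % workers] += cost;
    }
    load
}

fn main() {
    // Hypothetical workload: every fourth task is ten times more expensive.
    let costs = [10, 1, 1, 1, 10, 1, 1, 1];
    let load = round_robin_load(&costs, 4);
    // Each worker got 2 tasks, but worker 0 has 10x the work of the others.
    println!("{:?}", load); // [20, 2, 2, 2]
}
```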

Least-Loaded (LL) Scheduler

The Least-Loaded scheduling algorithm is a simple yet effective strategy that addresses the issue of starvation. It does this by assigning each new task to the worker that currently has the least workload.

When a task is woken and needs to be rescheduled, the scheduler assigns it to the worker with the fewest tasks in its queue.
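The core decision of a least-loaded scheduler fits in a few lines. This sketch assumes the scheduler can read each worker's current queue length (here simply a slice of numbers) and breaks ties by picking the lowest-indexed worker; Nio's own tie-breaking rule isn't described here, so that part is an assumption.

```rust
/// Return the index of the worker with the fewest queued tasks.
/// `Iterator::min_by_key` returns the first minimum it finds, so ties
/// go to the lowest index. Returns `None` if there are no workers.
fn least_loaded(queue_lens: &[usize]) -> Option<usize> {
    queue_lens
        .iter()
        .enumerate()
        .min_by_key(|&(_, &len)| len)
        .map(|(idx, _)| idx)
}

fn main() {
    // Workers 1 and 3 are tied at one task each; the first one wins.
    println!("{:?}", least_loaded(&[3, 1, 4, 1])); // Some(1)
    println!("{:?}", least_loaded(&[])); // None
}
```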

The current implementation uses an mpsc channel and is just 150 lines of code!
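The 150-line implementation isn't reproduced here, so the following is only a rough, hypothetical sketch of how a least-loaded scheduler could be built on `std::sync::mpsc`: each worker thread owns the receiving end of a channel, and a shared atomic counter per worker tracks how many tasks are queued or running there. The names `LeastLoaded`, `Worker`, `spawn`, and `shutdown` are illustrative, not taken from Nio's source, and a real async runtime would schedule futures and wakers rather than plain closures.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::mpsc::{channel, Sender};
use std::sync::Arc;
use std::thread::{self, JoinHandle};

type Task = Box<dyn FnOnce() + Send + 'static>;

struct Worker {
    tx: Sender<Task>,
    /// Tasks sent to this worker that have not finished yet.
    load: Arc<AtomicUsize>,
}

struct LeastLoaded {
    workers: Vec<Worker>,
    handles: Vec<JoinHandle<()>>,
}

impl LeastLoaded {
    fn new(n: usize) -> Self {
        let mut workers = Vec::with_capacity(n);
        let mut handles = Vec::with_capacity(n);
        for _ in 0..n {
            let (tx, rx) = channel::<Task>();
            let load = Arc::new(AtomicUsize::new(0));
            let worker_load = Arc::clone(&load);
            // Each worker drains its own channel until its sender is dropped.
            handles.push(thread::spawn(move || {
                for task in rx {
                    task();
                    worker_load.fetch_sub(1, Ordering::Relaxed);
                }
            }));
            workers.push(Worker { tx, load });
        }
        LeastLoaded { workers, handles }
    }

    /// Send the task to the worker with the smallest current load.
    /// Under concurrency the load snapshot may be slightly stale,
    /// which is acceptable for a placement heuristic.
    fn spawn(&self, task: Task) {
        let worker = self
            .workers
            .iter()
            .min_by_key(|w| w.load.load(Ordering::Relaxed))
            .expect("at least one worker");
        worker.load.fetch_add(1, Ordering::Relaxed);
        worker.tx.send(task).expect("worker thread exited");
    }

    /// Close all channels and wait for the workers to finish their queues.
    fn shutdown(self) {
        let LeastLoaded { workers, handles } = self;
        drop(workers); // dropping the senders ends each worker's `for` loop
        for h in handles {
            h.join().expect("worker panicked");
        }
    }
}

fn main() {
    let sched = LeastLoaded::new(4);
    let done = Arc::new(AtomicUsize::new(0));
    for _ in 0..100 {
        let done = Arc::clone(&done);
        sched.spawn(Box::new(move || {
            done.fetch_add(1, Ordering::Relaxed);
        }));
    }
    sched.shutdown();
    println!("completed {} tasks", done.load(Ordering::Relaxed)); // completed 100 tasks
}
```

Keeping the counter outside the channel is a design choice in this sketch: `mpsc` does not expose a queue length, so the scheduler increments the counter before sending and the worker decrements it after running the task.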

This scheduling strategy is simple, fast, and solves starvation.

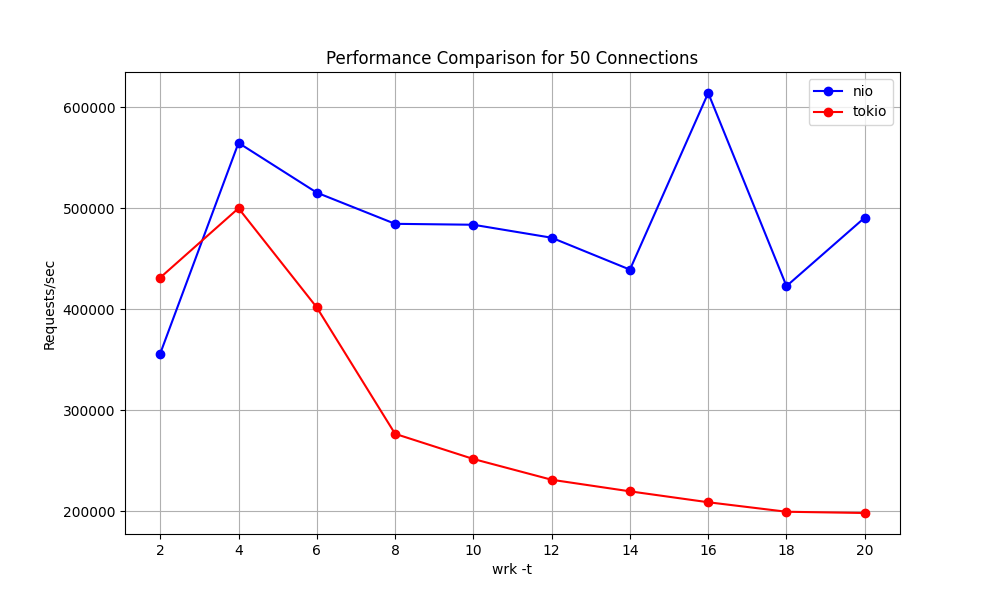

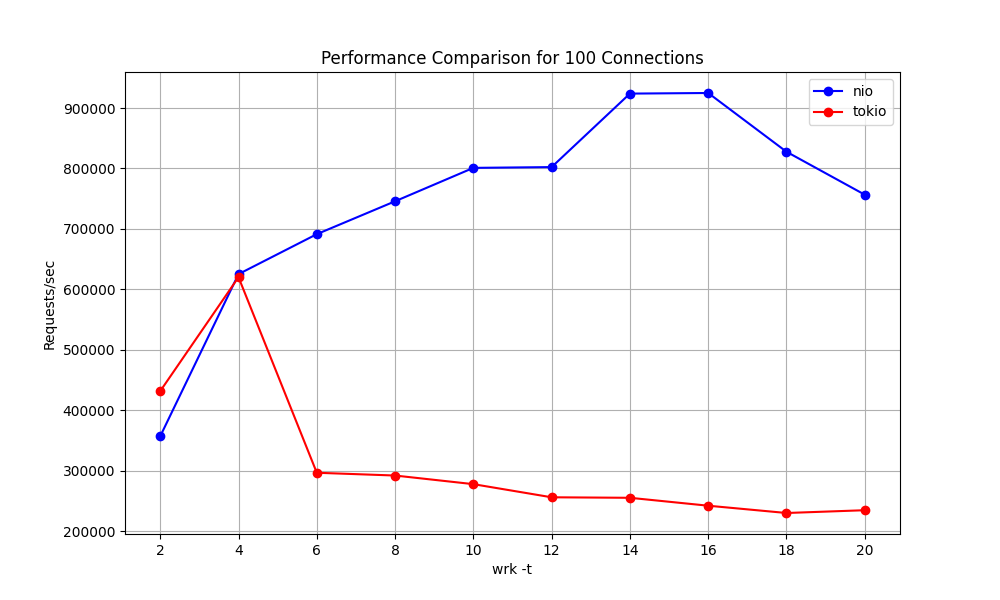

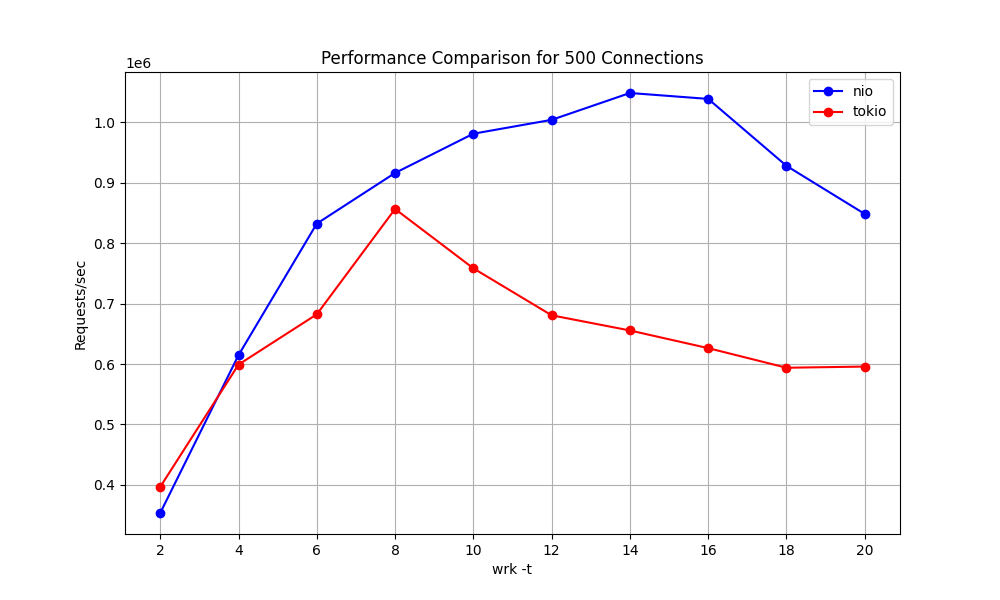

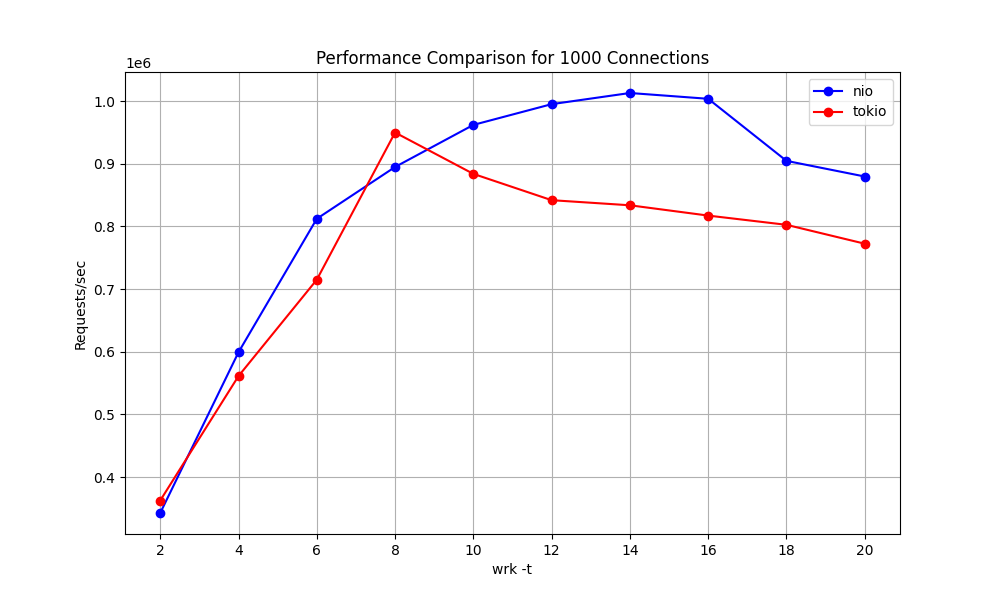

Benchmark

The LL scheduler shows promising performance improvements.

> cd

> cargo

> cargo

These days, whenever someone introduces a new runtime, it's something of a tradition to run an HTTP benchmark. So, we honor the tradition with a hyper "Hello World" HTTP benchmark ceremony, using:


> wrk

This benchmark is both meaningless and misleading, as no real-world server would ever respond with nothing more than a simple "Hello, World" message.

What's going on?! 😕

In work-stealing schedulers, when a worker's local queue is empty, the worker fetches a batch of tasks from the shared global (injector) queue. However, under high load, multiple workers try to take tasks from the injector queue at the same time. This increases contention, and workers spend more time waiting instead of running tasks.
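The refill path described above can be modeled with a single mutex-protected global queue. This is a simplified assumption for illustration, not Tokio's actual injector code: every worker whose local queue runs dry has to take the same lock to grab a batch, so the more workers go idle at once, the more of them end up serialized on that one lock.

```rust
use std::collections::VecDeque;
use std::sync::Mutex;

/// Move up to `max_batch` tasks from the shared injector into a worker's
/// local queue and return how many were moved.
///
/// Every idle worker calls this against the same `Mutex`, which is the
/// contention point: only one worker can hold the lock at a time.
fn refill_from_injector<T>(
    injector: &Mutex<VecDeque<T>>,
    local: &mut VecDeque<T>,
    max_batch: usize,
) -> usize {
    let mut global = injector.lock().expect("injector lock poisoned");
    let n = max_batch.min(global.len());
    local.extend(global.drain(..n));
    n
}

fn main() {
    let injector = Mutex::new((0..10).collect::<VecDeque<u32>>());
    let mut local = VecDeque::new();
    let moved = refill_from_injector(&injector, &mut local, 4);
    println!("moved {moved}, local = {:?}", local); // moved 4, local = [0, 1, 2, 3]
}
```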

Let's increase the number of connections to reduce contention.

Conclusion

None of these benchmarks should be considered definitive measures of runtime performance. That said, I believe there is potential for performance improvement. I encourage you to experiment with this new scheduler and share benchmarks from your real-world use cases.